FLaP

[Forschen und Lernen aus Prüfdaten (Research and Learning from Test Data)]

The German Research Center for Artificial Intelligence (DFKI), the world's largest non-profit research center for AI, and IAV, one of the automotive industry's most successful development partners, have joined forces in the "Learning from Test Data" Research Laboratory (FLaP). In this collaborative environment at DFKI in Kaiserslautern, specialized Artificial Intelligence methods are researched and developed for use in automotive test procedures. The lab develops state-of-the-art machine learning technologies, such as deep learning models and tools for time series analysis.

IAV Digitalization Award

Submission Deadline: Sep 30, 2023

Your thesis is pushing the frontier of digitalization? Your algorithm is more intelligent than others'? You are able to steer processes on a new level? You strengthen our trust in the ability of autonomous systems? You are pushing the boundaries of machine learning? Submit your work to us and be rewarded with 1500€ for the best contribution!

Our Projects

This is an overview of selected projects developed by our team of researchers:

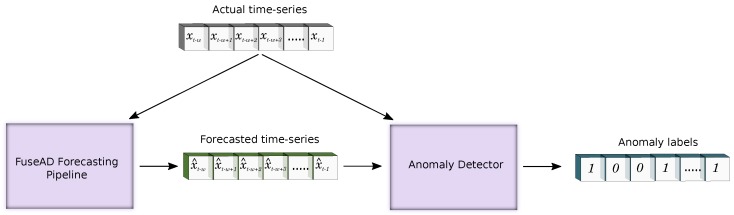

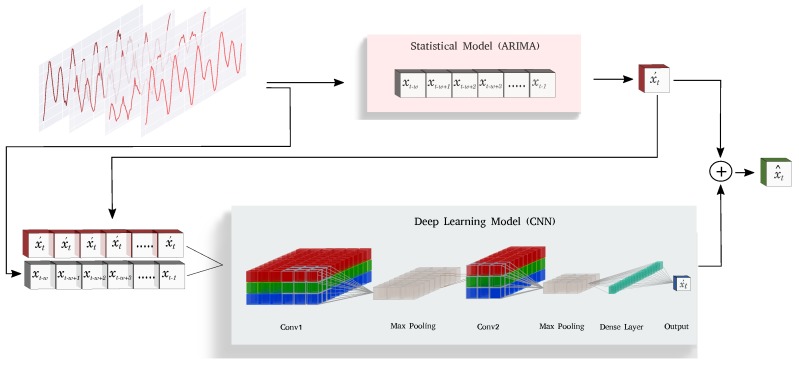

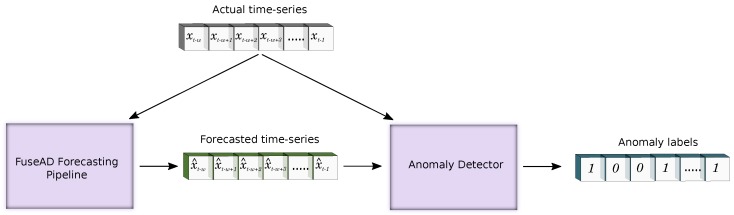

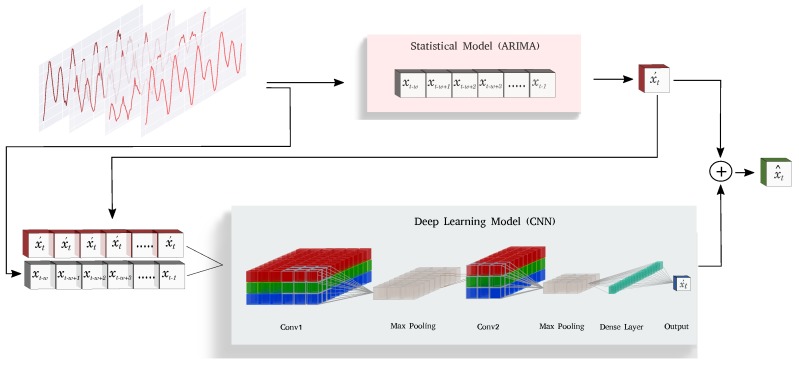

FuseAD

The need for robust unsupervised anomaly detection in streaming data is increasing rapidly in the current era of smart devices, where enormous amounts of data are gathered from numerous sensors. These sensors record the internal state of a machine, the external environment, and the interaction of machines with other machines and humans. It is of prime importance to leverage this information to minimize machine downtime, or even avoid it completely, through constant monitoring. Since each device generates a different type of streaming data, the best-performing anomaly detection technique usually depends on the data type. For some types of data and use cases, statistical anomaly detection techniques work better, whereas for others, deep learning-based techniques are preferred. In this paper, we present a novel anomaly detection technique, FuseAD, which takes advantage of both statistical and deep learning-based approaches by fusing them together in a residual fashion. When tested on a publicly available dataset (Yahoo Webscope benchmark), FuseAD achieves a higher area under the curve (AUC) than state-of-the-art anomaly detection methods. These results suggest that this fusion-based technique can obtain the best of both worlds by combining their strengths and complementing their weaknesses. We also perform an ablation study to quantify the contribution of the individual components in FuseAD, i.e., the statistical ARIMA model and the deep learning-based convolutional neural network (CNN) model.

Read the paper!
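The residual-fusion idea can be illustrated with a small sketch. This is not the FuseAD implementation: under simplifying assumptions, a linear AR(p) model fitted by least squares stands in for ARIMA, a second least-squares model on quadratic window features stands in for the CNN and learns a correction to the statistical forecast, and the anomaly score is the absolute distance between the observation and the fused forecast. All function names are hypothetical.

```python
import numpy as np

def make_windows(x, p):
    """History windows of length p and the value that follows each one."""
    X = np.stack([x[i:i + p] for i in range(len(x) - p)])
    return X, x[p:]

def fit_linear(features, target):
    """Least-squares fit with a bias term; returns a prediction function."""
    A = np.hstack([features, np.ones((len(features), 1))])
    coef, *_ = np.linalg.lstsq(A, target, rcond=None)
    return lambda F: np.hstack([F, np.ones((len(F), 1))]) @ coef

def fused_anomaly_scores(x, p=8):
    X, y = make_windows(x, p)
    # Statistical branch: linear AR(p) forecast, a stand-in for ARIMA.
    stat_forecast = fit_linear(X, y)(X)
    # Learned branch: fitted on what the statistical branch gets wrong.
    # Quadratic window features are a crude stand-in for the CNN's nonlinearity.
    nonlinear = np.hstack([X, X ** 2])
    correction = fit_linear(nonlinear, y - stat_forecast)(nonlinear)
    # Residual fusion: the learned branch adds a correction to the statistical forecast.
    fused = stat_forecast + correction
    # Anomaly score: distance between the observation and the fused forecast.
    return np.abs(y - fused)

rng = np.random.default_rng(0)
t = np.arange(500)
x = np.sin(2 * np.pi * t / 50) + 0.05 * rng.standard_normal(500)
x[300] += 3.0                                   # injected point anomaly
scores = fused_anomaly_scores(x)
print("highest score at index", int(np.argmax(scores)) + 8)  # +p maps back to x
```

Because the learned branch only models the residual of the statistical branch, removing it leaves a working statistical detector, which is what makes an ablation of the two components straightforward.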

Probabilistic Time Series Forecasting

This is a collection of projects: CRPS-Sum, ForGAN, If you like it GAN it!, Multistep Forecasting

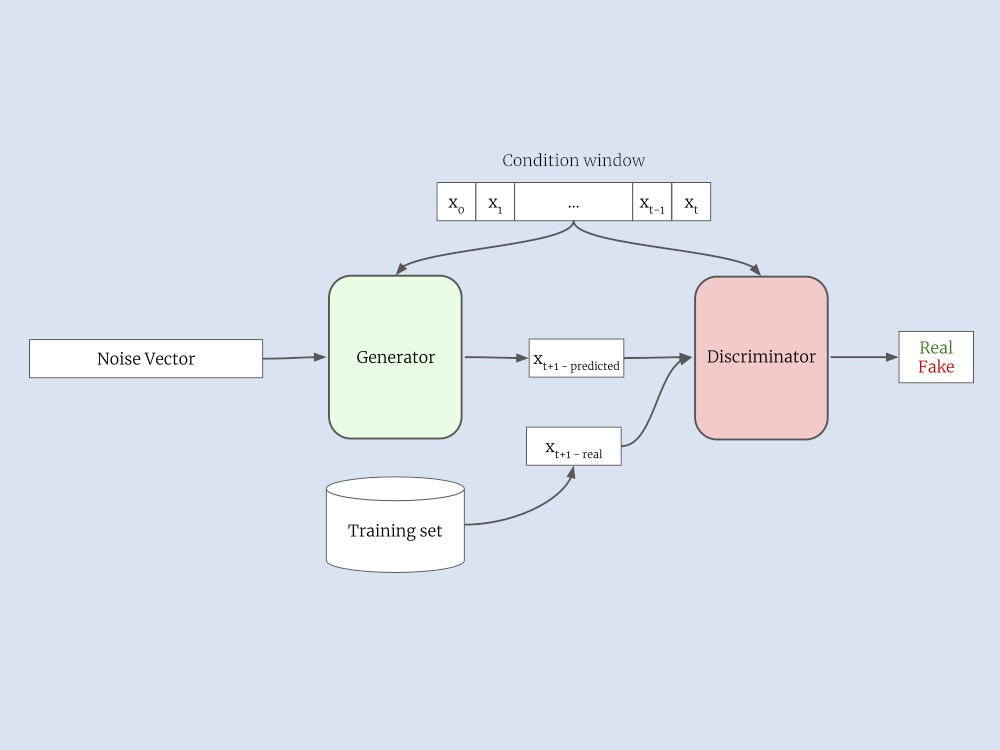

ForGAN

Probabilistic Forecasting of Sensory Data with Generative Adversarial Networks - ForGAN

ForGAN is a one-step-ahead probabilistic forecasting model. It uses a conditional generative adversarial network (CGAN) to learn the probability distribution of future values given the previous values. The figure below shows the ForGAN architecture.

Visit the ForGAN repository!

Read the ForGAN paper!

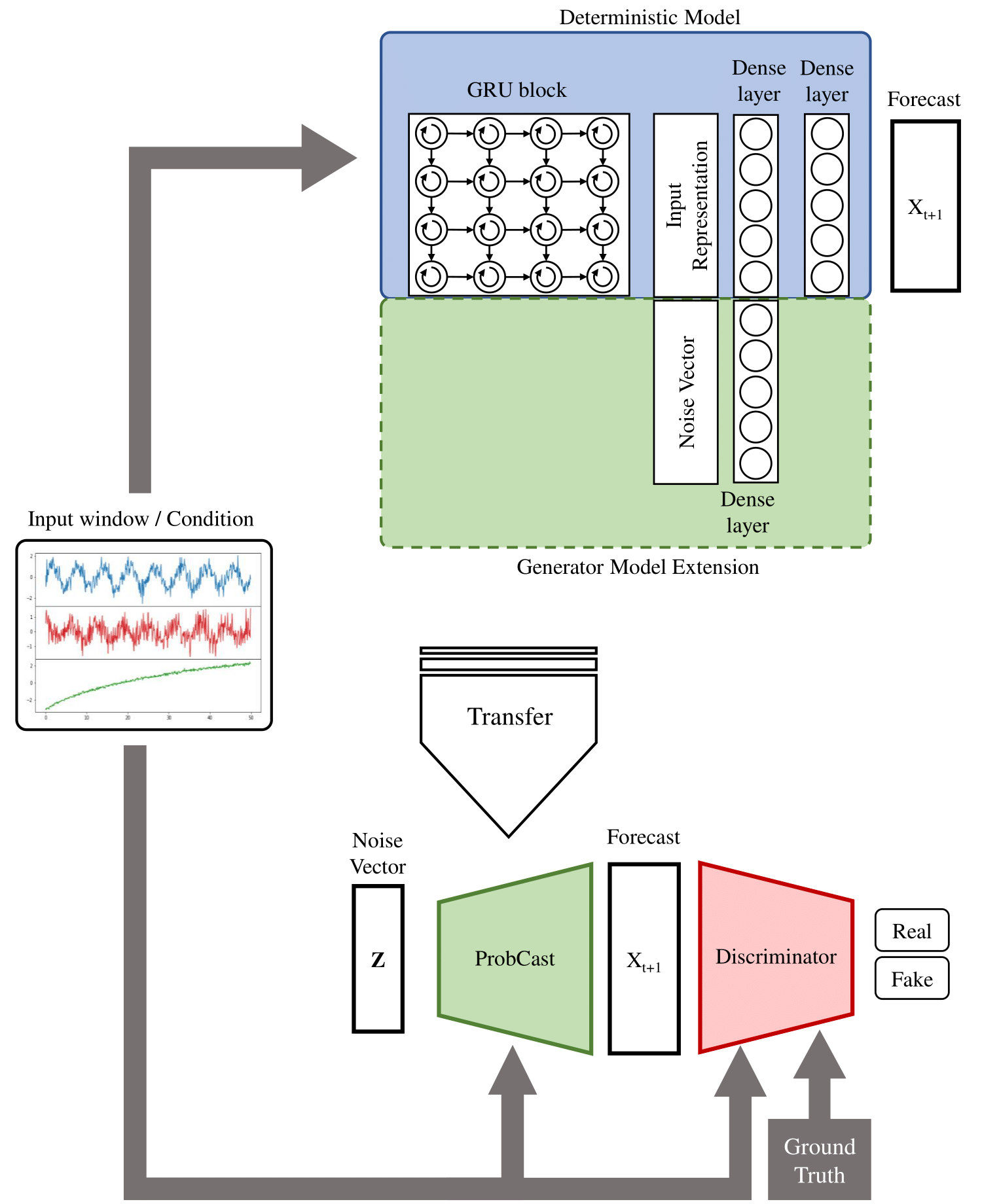

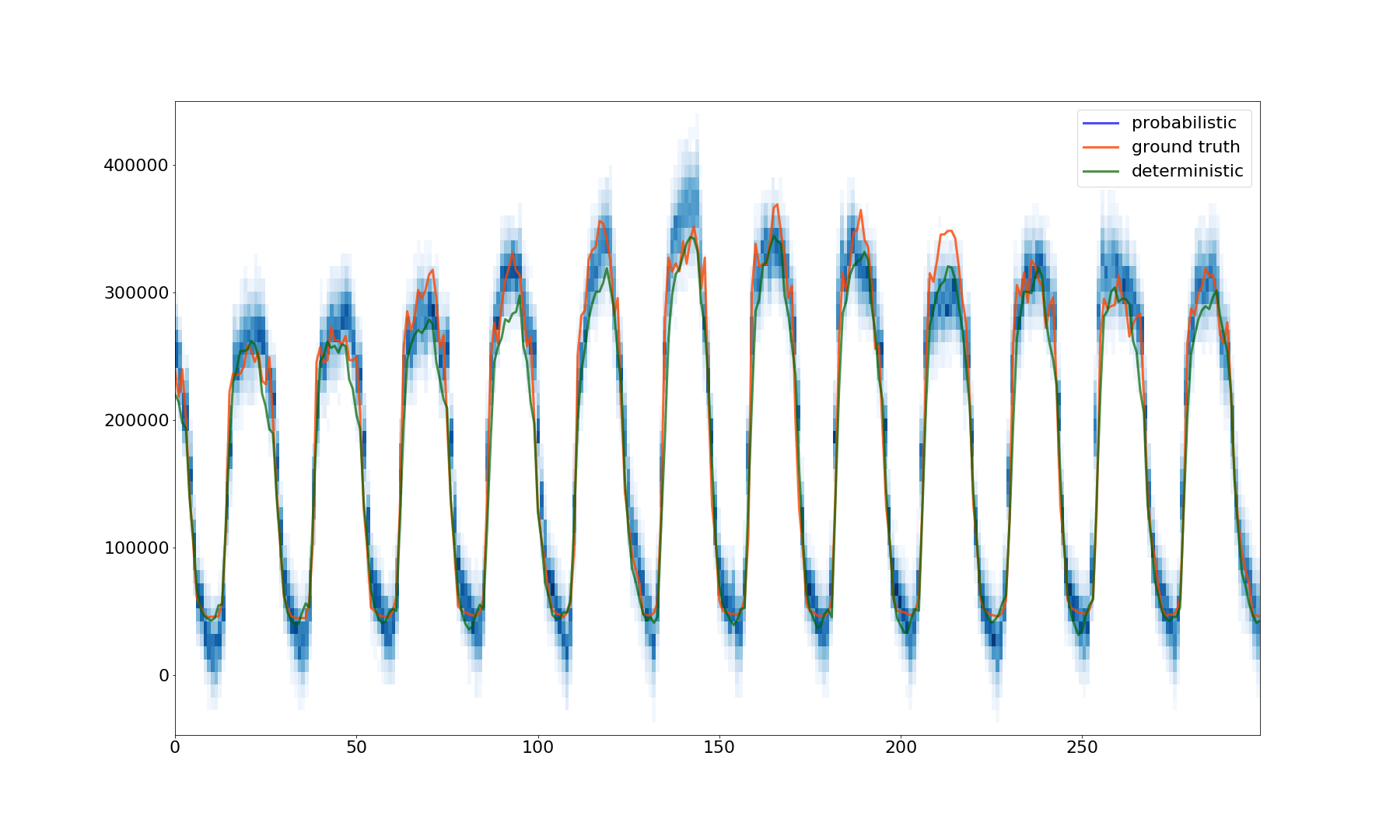
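Once trained, a conditional generator turns one history window plus random noise into one plausible next value; repeating this with fresh noise yields samples from the learned forecast distribution. The sketch below shows that sampling loop and scores the samples with the sample-based continuous ranked probability score, CRPS = E|X - y| - 0.5 E|X - X'|, the univariate score on which the CRPS-Sum metric builds. The `toy_generator` is a hypothetical stand-in (a fixed linear rule plus noise), not a trained ForGAN model.

```python
import numpy as np

rng = np.random.default_rng(42)

def toy_generator(history, z):
    """Hypothetical stand-in for a trained conditional generator G(z | history)."""
    return 0.9 * history[-1] + 0.1 * history.mean() + 0.2 * z

def sample_forecasts(generator, history, n_samples=500):
    # One noise draw per sample; the condition (history) stays fixed.
    z = rng.standard_normal(n_samples)
    return np.array([generator(history, zi) for zi in z])

def crps_from_samples(samples, y):
    """Empirical CRPS: E|X - y| - 0.5 * E|X - X'| over all sample pairs."""
    term1 = np.mean(np.abs(samples - y))
    term2 = 0.5 * np.mean(np.abs(samples[:, None] - samples[None, :]))
    return term1 - term2

history = np.array([0.1, 0.3, 0.2, 0.5, 0.4])
samples = sample_forecasts(toy_generator, history)
lo, hi = np.quantile(samples, [0.05, 0.95])     # 90% prediction interval
print(f"median={np.median(samples):.3f}, 90% PI=({lo:.3f}, {hi:.3f})")
print("CRPS at y=0.39:", crps_from_samples(samples, 0.39))
```

Lower CRPS is better; it rewards forecasts that are both sharp and centered on the observed value, which a point-error metric cannot distinguish.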

If you like it GAN it!

In this project, we introduced ProbCast, a novel probabilistic model for multivariate time-series forecasting. We employ a conditional GAN framework to train our model with adversarial training. Furthermore, we propose a framework that transforms a deterministic model into a probabilistic one with improved performance. The motivation of the framework is either to transform existing highly accurate point forecast models into their probabilistic counterparts, or to train GANs stably by selecting the architecture of the GAN's components carefully and efficiently.

Read the If you like it GAN it! paper!

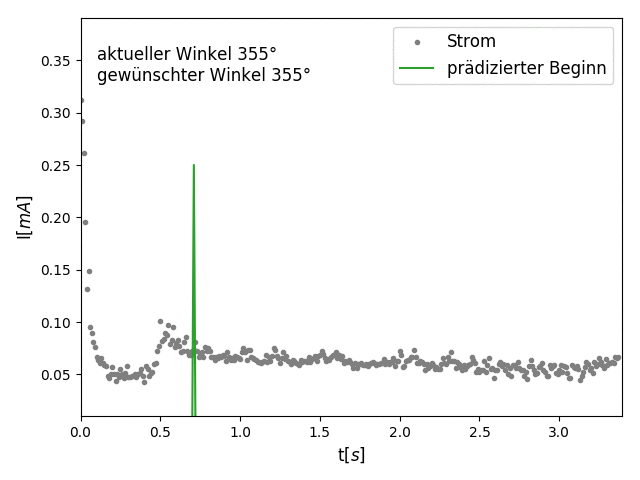

DeepValve

DeepValve demonstrates how machine learning can be used to control sensorless processes, as they occur in many large-scale production plants. The valve has no internal sensors, yet its response to control signals involves highly non-linear processes. However, the resulting patterns share similarities, which makes it possible to learn them.

Neural Architecture Search

This is a collection of projects: Task Agnostic Neural Architecture Search, Neural Architecture Search and Swarm Optimization via Transfer Learning

Task Agnostic Neural Architecture Search (NAS) for time series with Reinforcement Learning

Task-agnostic NAS trains a policy on multiple training tasks so that it generalizes well enough to predict an optimal network for an unseen test task with only minimal policy updates. Ultimately, the training process learns a global policy by transferring knowledge between child policies.
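The core mechanics of a reinforcement-learning NAS controller trained across several tasks can be sketched in a few lines. This is a minimal sketch under strong assumptions: the search space (kernel size and depth) is hypothetical, each task is a hypothetical reward function standing in for the validation score of a trained child network, and a single shared softmax policy is updated with REINFORCE and a moving-average baseline. It does not model the knowledge transfer between child policies used in the project.

```python
import numpy as np

rng = np.random.default_rng(0)

KERNELS = [3, 5, 7]   # hypothetical search space
DEPTHS = [2, 4, 6]

def make_task(best_k, best_d):
    """Hypothetical task reward; real NAS would train and validate a child network."""
    return lambda k, d: 1.0 - 0.1 * abs(k - best_k) - 0.05 * abs(d - best_d)

tasks = [make_task(5, 4), make_task(5, 6), make_task(7, 4)]
logits = {"kernel": np.zeros(len(KERNELS)), "depth": np.zeros(len(DEPTHS))}

def softmax(v):
    e = np.exp(v - v.max())
    return e / e.sum()

baseline, lr = 0.0, 0.2
for step in range(3000):
    task = tasks[step % len(tasks)]          # cycle through the training tasks
    probs = {name: softmax(l) for name, l in logits.items()}
    ki = rng.choice(len(KERNELS), p=probs["kernel"])
    di = rng.choice(len(DEPTHS), p=probs["depth"])
    reward = task(KERNELS[ki], DEPTHS[di])
    advantage = reward - baseline
    baseline = 0.9 * baseline + 0.1 * reward
    # REINFORCE: gradient of log softmax w.r.t. logits is one_hot - probs.
    for name, idx in (("kernel", ki), ("depth", di)):
        grad = -probs[name]
        grad[idx] += 1.0
        logits[name] += lr * advantage * grad

best_kernel = KERNELS[int(np.argmax(logits["kernel"]))]
best_depth = DEPTHS[int(np.argmax(logits["depth"]))]
print(best_kernel, best_depth)
```

Because the policy is shared, it converges to the choice with the best average reward across the training tasks, which is the starting point a task-agnostic controller would then fine-tune for a new task.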

Neural Architecture Search and Swarm Optimization via Transfer Learning

Deep learning has gained ground in recent years due to its immense success in solving perceptual tasks. Over time, however, designing deep neural architectures manually has become a growing challenge for human engineers. This problem has led to research on Neural Architecture Search (NAS), an automated process that aims to discover the best-performing neural network architectures for a specific problem.

NAS has predominantly been implemented using Evolutionary Algorithms, Bayesian Optimization, and Reinforcement Learning. Most of these approaches are computationally very expensive and work only for the specific tasks they were defined for.

Our aim in this project is to develop a NAS method based on Swarm Optimization that, using a transfer learning strategy, can efficiently design neural networks across various time series tasks.

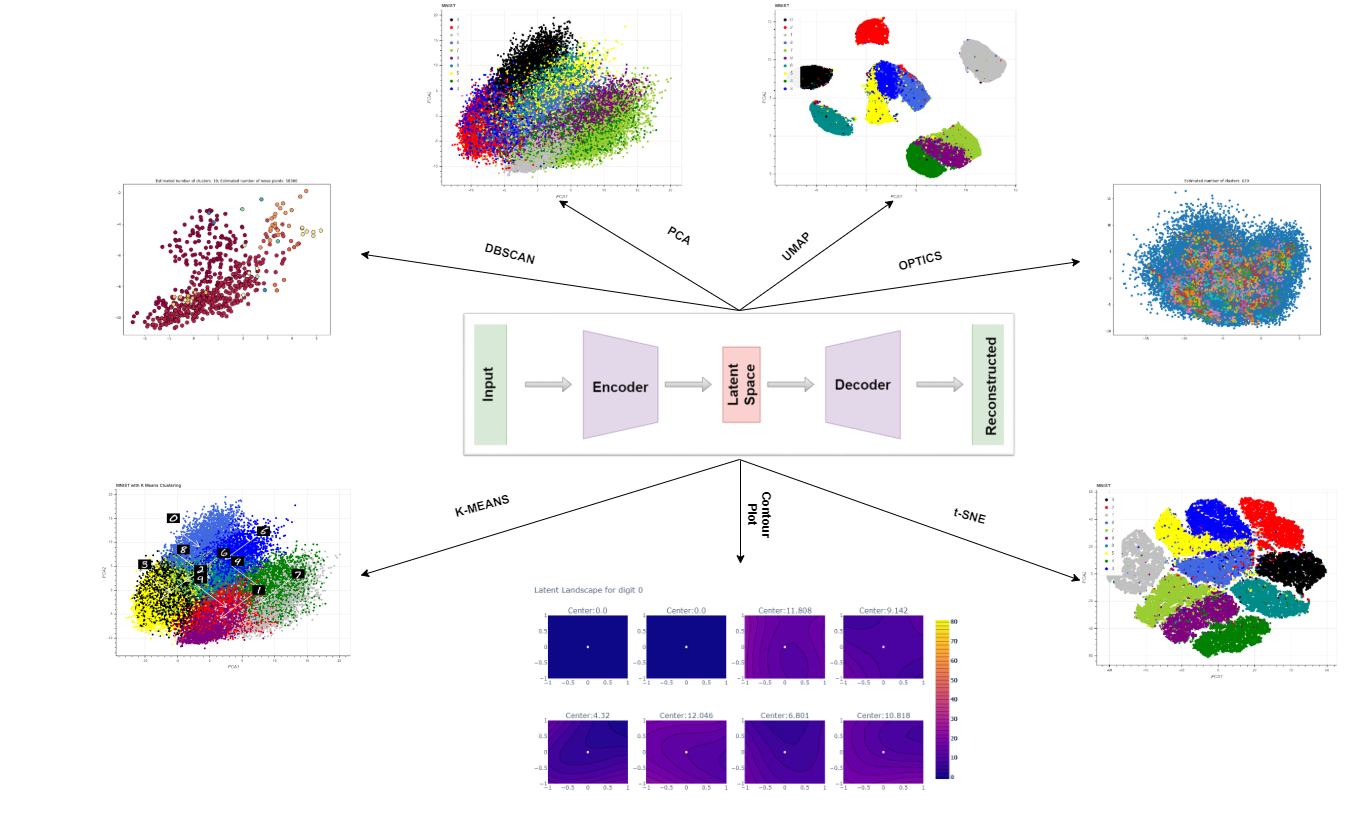

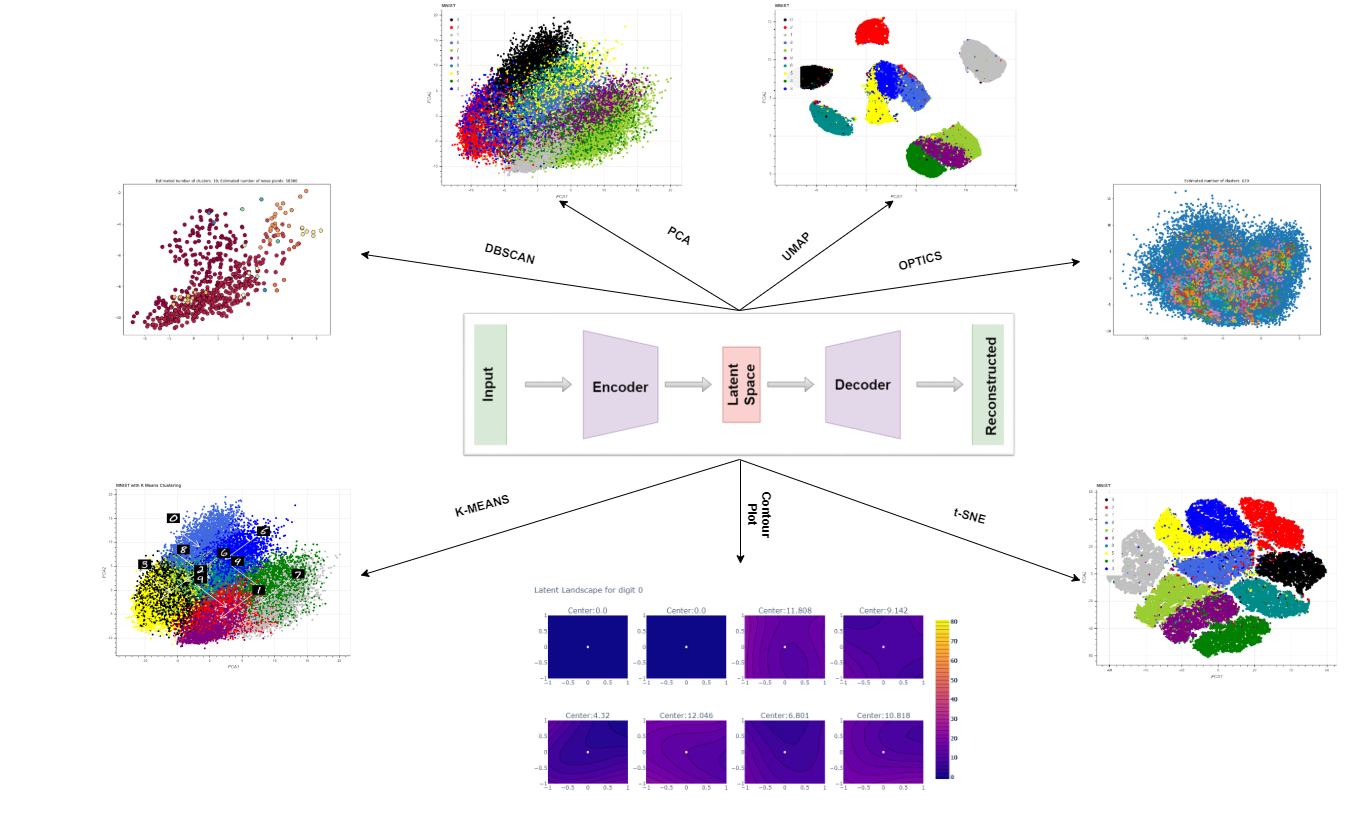
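Swarm-based search treats each candidate configuration as a particle moving through the search space. The sketch below is plain global-best particle swarm optimization (PSO) over two continuous hyperparameters. The `validation_loss` function is a hypothetical, cheap surrogate for training and evaluating a network, the coefficients (inertia 0.7, cognitive and social weights 1.5) are common defaults assumed here, and the transfer-learning component of the project is not modeled.

```python
import numpy as np

rng = np.random.default_rng(1)

def validation_loss(p):
    """Hypothetical surrogate; real NAS would train a network per evaluation.
    Dimensions: log10(learning rate), number of filters / 100."""
    log_lr, filters = p
    return (log_lr + 3.0) ** 2 + (filters - 0.64) ** 2

def pso(f, lower, upper, n_particles=20, n_iter=100, w=0.7, c1=1.5, c2=1.5):
    dim = len(lower)
    pos = rng.uniform(lower, upper, size=(n_particles, dim))
    vel = np.zeros_like(pos)
    pbest = pos.copy()                               # each particle's best position
    pbest_val = np.array([f(p) for p in pos])
    gbest = pbest[np.argmin(pbest_val)].copy()       # best position of the swarm
    for _ in range(n_iter):
        r1 = rng.random((n_particles, dim))
        r2 = rng.random((n_particles, dim))
        # Velocity: inertia + pull toward own best + pull toward swarm best.
        vel = w * vel + c1 * r1 * (pbest - pos) + c2 * r2 * (gbest - pos)
        pos = np.clip(pos + vel, lower, upper)
        vals = np.array([f(p) for p in pos])
        improved = vals < pbest_val
        pbest[improved] = pos[improved]
        pbest_val[improved] = vals[improved]
        gbest = pbest[np.argmin(pbest_val)].copy()
    return gbest, pbest_val.min()

best, loss = pso(validation_loss, lower=np.array([-5.0, 0.1]), upper=np.array([-1.0, 2.0]))
print(best, loss)
```

In a transfer-learning setting, one natural option would be to seed the initial swarm with the best particles found on earlier tasks instead of sampling them uniformly.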

Latent Space Visualization

Autoencoders are artificial neural networks that can be used to learn efficient encodings of unlabeled, high-dimensional data. This encoding, or latent space, is like a black box, which we can explore through different visualization and clustering methods. These methods provide insight into, and an improved understanding of, our unlabeled high-dimensional data.

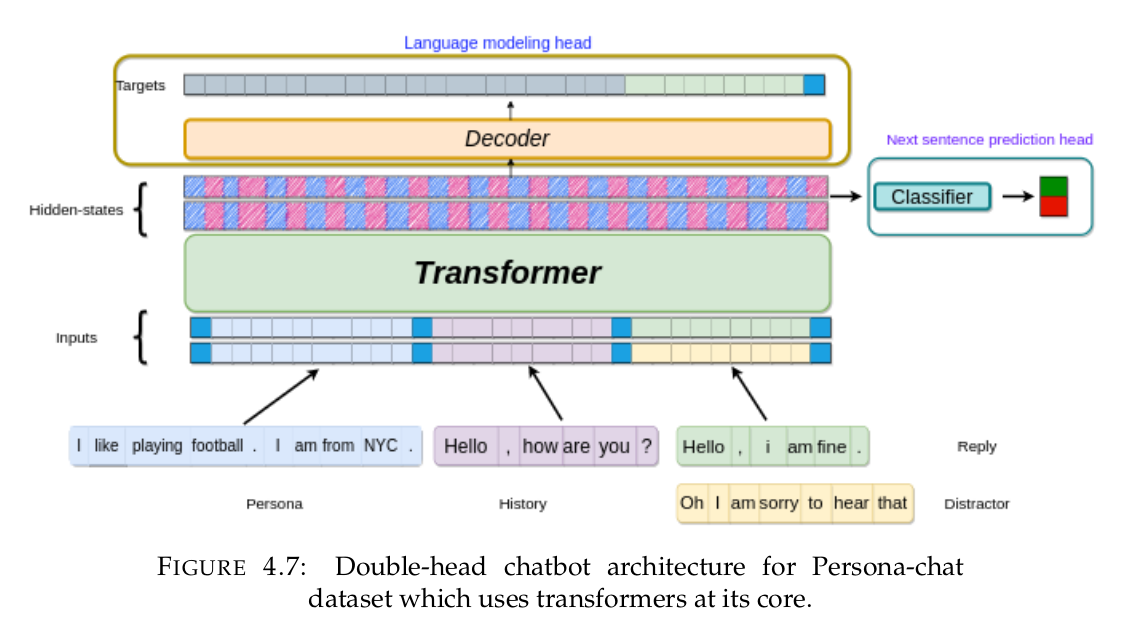
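A typical exploration workflow encodes every sample, projects the latent codes to two dimensions for plotting, and clusters them. The sketch below assumes a linear encoder and uses PCA via SVD in its place (a linear autoencoder trained with squared error spans the same subspace at its optimum), followed by a basic k-means on the 2-D codes. The synthetic data, `linear_encode`, and the choice k=3 are assumptions for illustration; a trained nonlinear encoder would replace `linear_encode` in practice.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic unlabeled high-dimensional data: three hidden groups in 50-D.
centers = rng.normal(scale=5.0, size=(3, 50))
data = np.vstack([c + rng.normal(size=(100, 50)) for c in centers])

def linear_encode(x, dim=2):
    """PCA projection, standing in for a trained encoder's latent space."""
    xc = x - x.mean(axis=0)
    _, _, vt = np.linalg.svd(xc, full_matrices=False)
    return xc @ vt[:dim].T

def kmeans(z, k, n_iter=50):
    # Initialize with k distinct latent points chosen at random.
    cent = z[rng.choice(len(z), size=k, replace=False)]
    for _ in range(n_iter):
        dist = np.linalg.norm(z[:, None, :] - cent[None, :, :], axis=2)
        labels = dist.argmin(axis=1)
        # Recompute centroids; keep the old one if a cluster becomes empty.
        cent = np.array([z[labels == j].mean(axis=0) if np.any(labels == j) else cent[j]
                         for j in range(k)])
    return labels, cent

latent = linear_encode(data)
labels, _ = kmeans(latent, k=3)
print(np.bincount(labels, minlength=3))   # cluster sizes in the latent space
```

Plotting `latent` colored by `labels` gives the kind of 2-D view used to inspect whether the latent space separates meaningful groups.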

Conversational AI

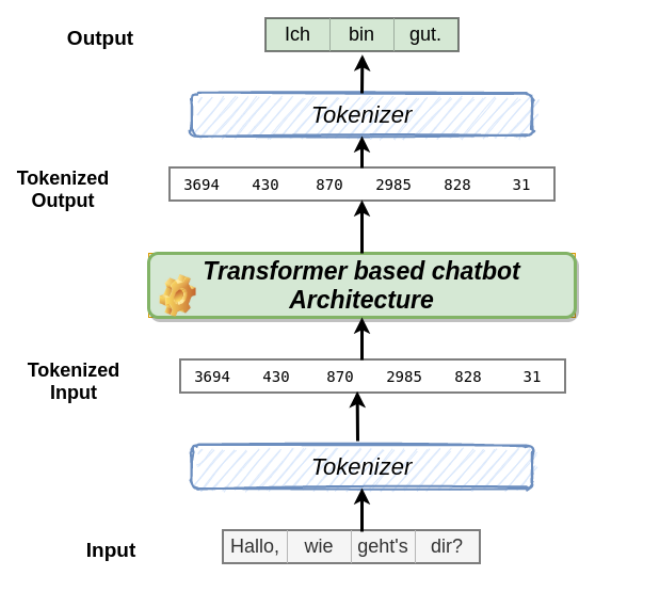

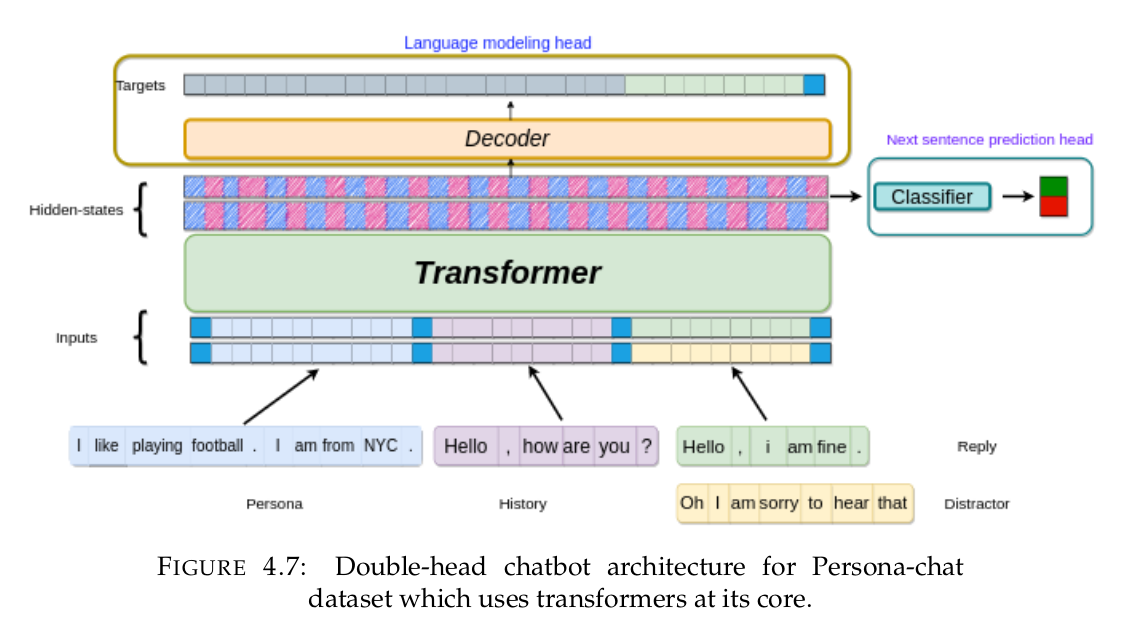

In this thesis, we explored transformer-based [16] chatbots in the German language. In the first phase, we surveyed state-of-the-art architectures from recent years, most of which were transformer-based. Our analysis showed that, for chatbot purposes, a transformer model with an autoregressive objective is superior to masked language modeling, since a chatbot is itself a language generation model, similar to an autoregressive language model.

In the next phase, we explored datasets for the German language. A rich, informative dataset matters as much as a powerful model in making the bot reply well. Our initial analysis showed that most chatbot datasets are in English and that there are very few simple German chatbot datasets. To overcome this scarcity, we tried several approaches: web scraping of different websites with Beautiful Soup and Selenium, extracting conversations from Reddit, and transformer-based translation of English chatbot datasets into German. After careful analysis of these approaches, we used the translated dataset for our experiments within the scope of this thesis. We provide the scripts for all of the mentioned approaches for further research, and we will make our translated datasets available for further research and for corrections to the translations.

Finally, inspired by the state-of-the-art analysis, we developed a double-head model with a language head and a classification head, as well as single-head end-to-end trainable German chatbot architectures, with clear and minimal code using PyTorch-Ignite and Hugging Face Transformers. We developed the models for the Persona-Chat format and for a general multi-turn dataset. The results show the potential of transformers in German chatbot applications: the GPT-2-based double-head architecture achieved a perplexity of 14.6, compared to 20.5 in the English language.

To my knowledge, this is the first time German pre-trained transformers such as GPT-2 and BERT have been used for chatbot purposes with a double-head architecture. The conversation examples show that adding a persona to the chatbot keeps the user engaged and the conversation interesting. Double-head models also converse better than single-head models, indicating that the additional classification head helps improve results by capturing global context. We also applied the double-head architecture to English datasets, and the resulting conversations appear interesting.

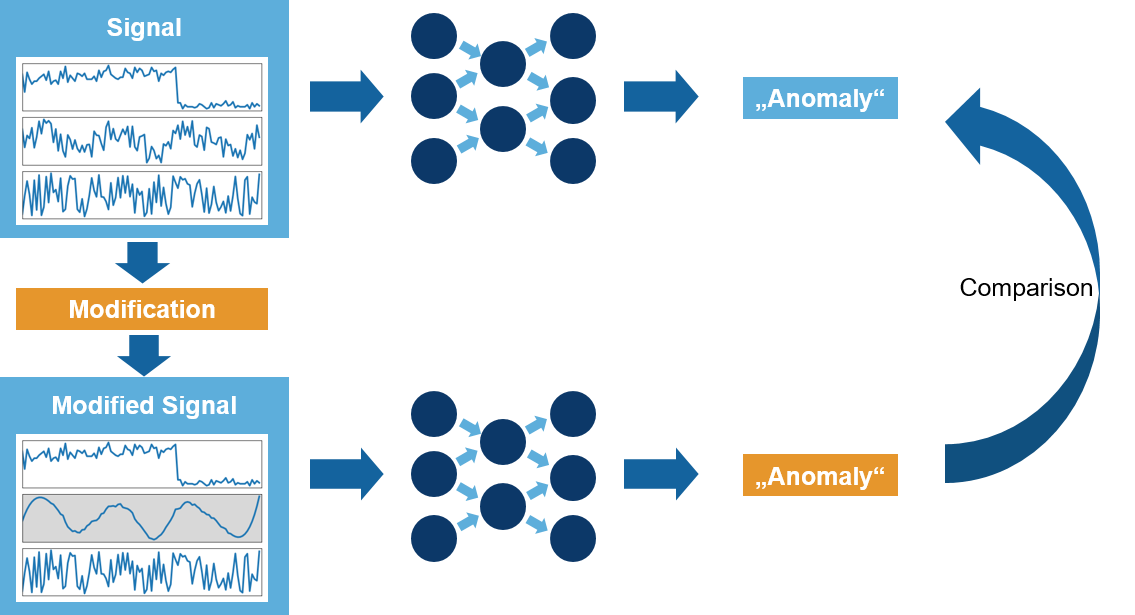

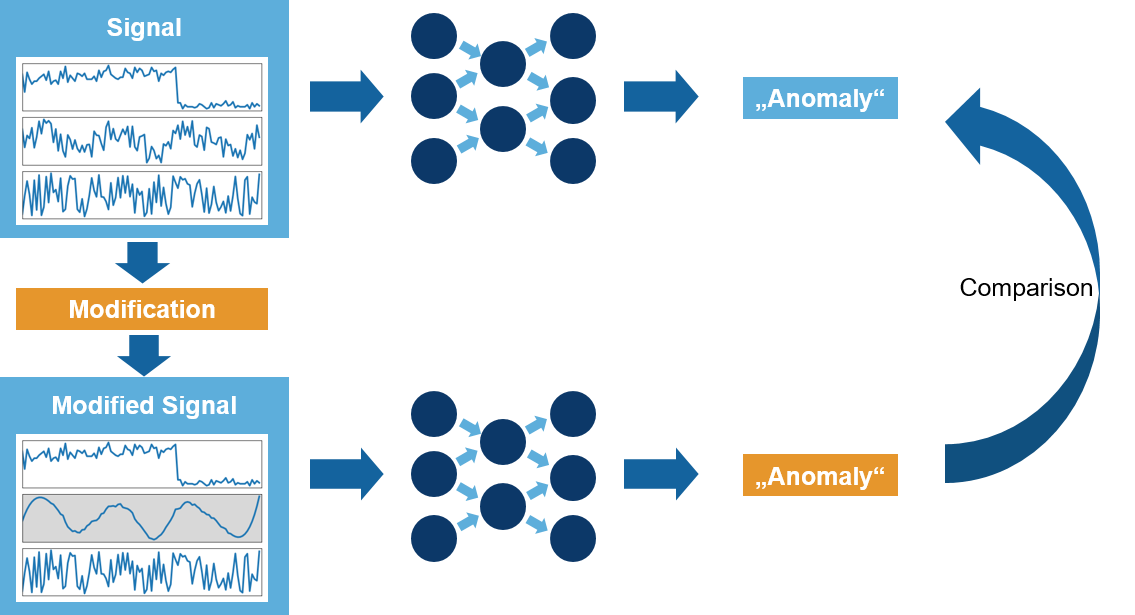
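The double-head setup optimizes two objectives at once: a language-modeling head predicts each token of the reply, and a classification head picks the true reply among distractor candidates. The sketch below computes that combined loss from made-up logits and shows that perplexity, the metric quoted above, is the exponential of the mean per-token negative log-likelihood. The shapes, the loss weights `lm_coef` and `mc_coef`, and all numbers are hypothetical; in the thesis the logits came from a GPT-2 model via Hugging Face Transformers.

```python
import numpy as np

def log_softmax(logits, axis=-1):
    m = logits.max(axis=axis, keepdims=True)
    return logits - m - np.log(np.exp(logits - m).sum(axis=axis, keepdims=True))

def double_head_loss(lm_logits, target_ids, mc_logits, correct_idx, lm_coef=2.0, mc_coef=1.0):
    # Language-modeling head: cross-entropy of each reply token.
    logp = log_softmax(lm_logits)                          # (seq_len, vocab)
    token_nll = -logp[np.arange(len(target_ids)), target_ids]
    lm_loss = token_nll.mean()
    # Classification (multiple-choice) head: which candidate is the real reply.
    mc_loss = -log_softmax(mc_logits)[correct_idx]
    return lm_coef * lm_loss + mc_coef * mc_loss, lm_loss

rng = np.random.default_rng(0)
vocab, seq_len = 50, 12
lm_logits = rng.normal(size=(seq_len, vocab))
targets = rng.integers(0, vocab, size=seq_len)
mc_logits = np.array([0.2, 1.5, -0.3, 0.1])              # candidate 1 is the true reply

total, lm_loss = double_head_loss(lm_logits, targets, mc_logits, correct_idx=1)
print("total loss:", total, "perplexity:", np.exp(lm_loss))
```

As a sanity check, uniform logits over a 50-token vocabulary give a perplexity of exactly 50: a model with perplexity 14.6 is, on average, as uncertain as a uniform choice among about 15 tokens.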

Conceptual Explanation

Conceptual Explanations of Neural Network Prediction for Time Series

Conceptual Explanation is a model-agnostic approach to uncover the effects of abstract (local or global) input features on the model's behavior. It allows the use of expert knowledge and has been designed specifically for the time series domain.

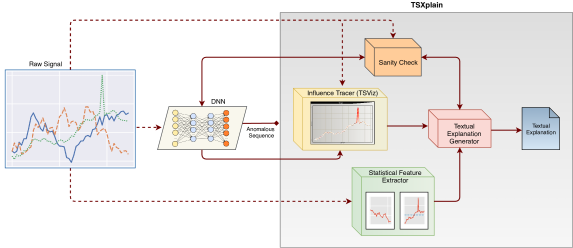

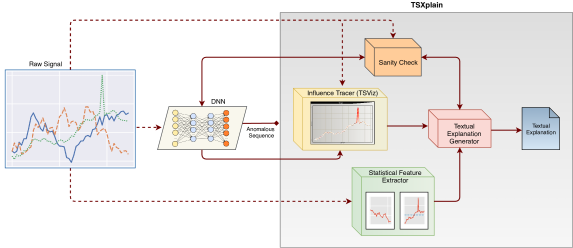
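Because the approach is model-agnostic, it only needs to query the model. One simple way to probe an abstract concept is to apply a transformation that removes that concept from the input and measure how much the prediction changes. The sketch below does this for two hypothetical concepts, a global trend and a local spike region. The concept transformations and the black-box `model` are illustrative assumptions, not the method from the paper.

```python
import numpy as np

def remove_trend(x):
    """Global concept: subtract the least-squares linear trend, keep the level."""
    t = np.arange(len(x))
    slope, intercept = np.polyfit(t, x, 1)
    return x - (slope * t + intercept) + x.mean()

def flatten_region(start, end):
    """Local concept: replace x[start:end] with the series median."""
    def transform(x):
        y = x.copy()
        y[start:end] = np.median(x)
        return y
    return transform

def concept_effects(model, x, concepts):
    """Drop in model output when each concept is removed from the input."""
    base = model(x)
    return {name: base - model(f(x)) for name, f in concepts.items()}

def model(x):
    """Hypothetical black box: reacts to end-vs-start level and to the peak."""
    return 0.5 * (x[-10:].mean() - x[:10].mean()) + 0.2 * x.max()

t = np.arange(100)
x = 0.05 * t + np.sin(t / 5.0)
x[40:45] += 4.0
effects = concept_effects(model, x, {"trend": remove_trend, "spike 40-45": flatten_region(40, 45)})
print(effects)
```

Expert knowledge enters through the choice of concept transformations: a domain expert decides which global or local patterns are worth testing.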

TSXplain

Neural networks (NNs) are considered black boxes because their decisions lack explainability and transparency. This significantly hampers their deployment in environments where explainability is essential alongside the accuracy of the system. Recently, significant efforts have been made to interpret these deep networks with the aim of opening up the black box. However, most of these approaches were developed specifically for visual modalities. In addition, the interpretations they provide require expert knowledge and understanding to be intelligible. This indicates a vital gap between the explainability provided by these systems and the needs of the novice user. To bridge this gap, we present a novel framework, the Time-Series eXplanation (TSXplain) system, which produces natural-language explanations of the decisions taken by a NN. It uses extracted statistical features to describe the decision of a NN, merging the deep learning world with that of statistics. The two-level explanation provides an ample description of the network's decision to aid expert and novice users alike. Our survey and reliability assessment test confirm that the generated explanations are meaningful and correct. We believe that generating natural-language descriptions of a network's decisions is a big step towards opening up the black box.

Using DFKI's tool TSViz, we propose an approach that produces textual explanations linking the network's result with possible causes in the input data. TSViz identifies the interesting regions in the input data, while a statistical mapping selects possible structural causes.

Read the paper!
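The last step of such a pipeline, turning statistics of a relevant input region into text, can be sketched as follows. This assumes the relevant region is already known (in TSXplain it would come from TSViz); the feature set (slope, mean, peak z-score), the `explain_region` function, and the sentence templates are hypothetical choices that mimic a short novice-level and a more detailed expert-level explanation.

```python
import numpy as np

def explain_region(x, start, end, label, slope_tol=0.01):
    """Two-level text explanation from statistics of the relevant region x[start:end]."""
    seg = x[start:end]
    slope = np.polyfit(np.arange(len(seg)), seg, 1)[0]
    if slope > slope_tol:
        trend = "rises"
    elif slope < -slope_tol:
        trend = "falls"
    else:
        trend = "stays roughly flat"
    peak_z = (seg.max() - x.mean()) / x.std()   # how unusual the region's peak is
    novice = (f"The network decided '{label}' mainly because of time steps "
              f"{start}-{end - 1}, where the signal looks unusual.")
    expert = (f"In steps {start}-{end - 1} the signal {trend} (slope {slope:.3f}/step), "
              f"has mean {seg.mean():.2f} vs. {x.mean():.2f} overall, and peaks "
              f"{peak_z:.1f} standard deviations above the series mean.")
    return novice, expert

t = np.arange(100)
x = np.sin(t / 5.0)
x[40:45] += 4.0
novice, expert = explain_region(x, 40, 45, label="anomaly")
print(novice)
print(expert)
```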

DeepCFD

Computational Fluid Dynamics (CFD) simulation by the numerical solution of the Navier-Stokes equations is an essential tool in a wide range of applications, from engineering design to climate modeling. However, the computational cost and memory demand of CFD codes may become very high for flows of practical interest, such as in aerodynamic shape optimization. This expense stems from the complexity of the equations governing fluid flow, which include non-linear partial derivative terms that are difficult to solve, leading to long computational times and limiting the number of hypotheses that can be tested during iterative design. Therefore, we propose DeepCFD: a convolutional neural network (CNN) based model that efficiently approximates solutions for the problem of non-uniform steady laminar flows. The proposed model learns complete solutions of the Navier-Stokes equations, for both velocity and pressure fields, directly from ground-truth data generated using a state-of-the-art CFD code. With DeepCFD, we found a speedup of up to 3 orders of magnitude compared to the standard CFD approach, at the cost of low error rates.

Read the paper!

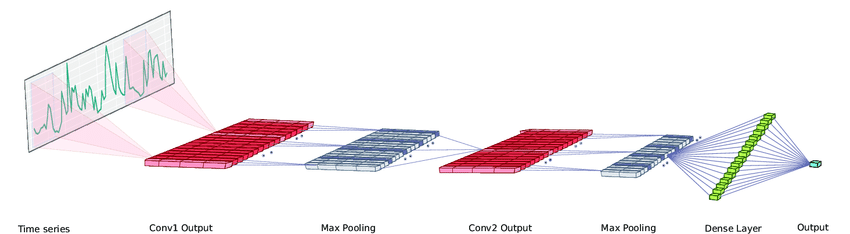

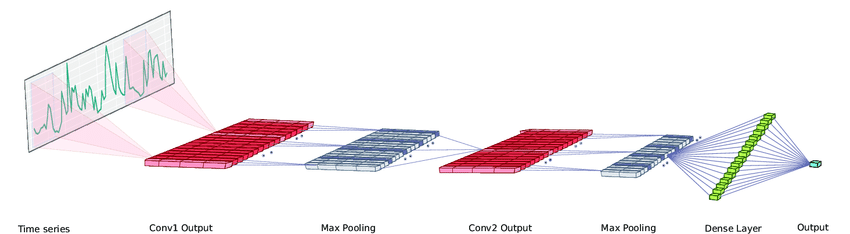
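A CNN surrogate needs the geometry on the same grid as the predicted velocity and pressure fields. A common encoding, and to our understanding the kind of input used for DeepCFD, is a signed distance function (SDF): each cell stores its distance to the obstacle surface, negative inside the obstacle. The sketch below builds an SDF channel for a circular obstacle plus a binary flow-region mask; the grid size, obstacle, and `circle_sdf` helper are assumptions, and the network itself is not shown.

```python
import numpy as np

def circle_sdf(nx, ny, cx, cy, r, h=1.0):
    """Signed distance to a circle on an ny-by-nx grid of cell centers.
    Negative inside the obstacle, zero on its surface, positive in the fluid."""
    x = (np.arange(nx) + 0.5) * h
    y = (np.arange(ny) + 0.5) * h
    X, Y = np.meshgrid(x, y, indexing="xy")   # both arrays have shape (ny, nx)
    return np.sqrt((X - cx) ** 2 + (Y - cy) ** 2) - r

sdf = circle_sdf(nx=128, ny=64, cx=40.0, cy=32.0, r=10.0)
flow_region = (sdf > 0).astype(np.float32)   # 1 = fluid, 0 = obstacle
inputs = np.stack([sdf, flow_region])        # (channels, ny, nx), ready for a CNN
print(inputs.shape)
```

Unlike a binary mask alone, the SDF tells every fluid cell how far it is from the wall, information that is useful for predicting boundary-layer behavior.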

DeepAnT

Traditional distance- and density-based anomaly detection techniques are unable to detect periodic and seasonality-related point anomalies, which occur commonly in streaming data, leaving a big gap in time series anomaly detection in the current era of the IoT. To address this problem, we present a novel deep learning-based anomaly detection approach (DeepAnT) for time series data, which is equally applicable to non-streaming cases. DeepAnT is capable of detecting a wide range of anomalies, i.e., point anomalies, contextual anomalies, and discords in time series data. Unlike anomaly detection methods in which the anomalies themselves are learned, DeepAnT uses unlabeled data to capture and learn the data distribution that is used to forecast the normal behavior of a time series. DeepAnT consists of two modules: a time series predictor and an anomaly detector. The time series predictor module uses a deep convolutional neural network (CNN) to predict the next time stamp on the defined horizon. This module takes a window of the time series (used as context) and attempts to predict the next time stamp. The predicted value is then passed to the anomaly detector module, which is responsible for tagging the corresponding time stamp as normal or abnormal. DeepAnT can be trained even without removing the anomalies from the given data set. Generally, deep learning-based approaches require a lot of data to train a model. In DeepAnT, however, a model can be trained on a relatively small data set while achieving good generalization capabilities, owing to the effective parameter sharing of the CNN. As the anomaly detection in DeepAnT is unsupervised, it does not rely on anomaly labels at the time of model generation. Therefore, this approach can be directly applied to real-life scenarios where it is practically impossible to label a big stream of data coming from heterogeneous sensors comprising both normal and anomalous points.
We have performed a detailed evaluation of 15 algorithms on 10 anomaly detection benchmarks, which contain a total of 433 real and synthetic time series. Experiments show that DeepAnT outperforms the state-of-the-art anomaly detection methods in most cases, while performing on par with them in the remaining ones.

Read the paper!
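The two DeepAnT modules can be sketched as a pipeline: slide a context window of w past values over the series, let the predictor forecast the next h values, and let the detector score each time stamp by the Euclidean distance between prediction and observation, flagging scores above a threshold. In this sketch an ordinary least-squares predictor stands in for the CNN, and the threshold rule (mean plus k standard deviations of the scores) is an assumption; the paper's threshold choice may differ. Function names are hypothetical.

```python
import numpy as np

def windows(x, w, h):
    """Context windows of length w and the next h values (the prediction horizon)."""
    n = len(x) - w - h + 1
    ctx = np.stack([x[i:i + w] for i in range(n)])
    tgt = np.stack([x[i + w:i + w + h] for i in range(n)])
    return ctx, tgt

def fit_predictor(ctx, tgt):
    """Least-squares stand-in for DeepAnT's CNN time series predictor."""
    A = np.hstack([ctx, np.ones((len(ctx), 1))])
    W, *_ = np.linalg.lstsq(A, tgt, rcond=None)
    return lambda c: np.hstack([c, np.ones((len(c), 1))]) @ W

def anomaly_scores(x, w=20, h=1):
    ctx, tgt = windows(x, w, h)
    predict = fit_predictor(ctx, tgt)        # trained on unlabeled data, anomalies included
    return np.linalg.norm(tgt - predict(ctx), axis=1)   # Euclidean distance per time stamp

def flag(scores, k=4.0):
    """Hypothetical threshold rule: mean + k standard deviations."""
    return scores > scores.mean() + k * scores.std()

rng = np.random.default_rng(3)
t = np.arange(600)
x = np.sin(2 * np.pi * t / 24) + 0.1 * rng.standard_normal(600)
x[400] -= 2.5                                # injected point anomaly
scores = anomaly_scores(x)
anomalous = np.flatnonzero(flag(scores)) + 20   # shift by w=20 to index into x (h=1)
print(anomalous)
```

Note that the predictor is fitted on data that still contains the anomaly; because a single outlier barely moves the fit, it still stands out in the scores, which mirrors the claim that DeepAnT can be trained without removing anomalies first.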

The Mentors

Andreas Dengel

Head of Smart Data & Knowledge Services (DFKI)

Matthias Schultalbers

Head of Business Area Future Powertrain (IAV)

Our Team

Sheraz Ahmed

Sheraz Ahmed is a Principal Researcher at the German Research Center for Artificial Intelligence (DFKI) and provides expertise, research consultation, and operational direction for the activities in FLaP. His research focuses on explainable AI and time-series analysis (forecasting, regression, anomaly detection) in both deterministic and probabilistic settings.

Peter Schichtel

A theoretical physicist by training, Peter is now the team leader of the IAV squad stationed at DFKI. His current research focuses on the different ways to generate and control neural networks for time series applications. He also investigates how to use probabilistic time series analysis in the automotive context.

Alireza Koochali

Alireza Koochali received his master's degree in computer science from the University of Kaiserslautern, Germany. His master's thesis was on Multimodal Sentiment Analysis with Deep Neural Networks. He is currently pursuing a Ph.D. degree in computer science at FLaP under the supervision of Prof. Dr. Prof. h.c. A. Dengel. His research interests include probabilistic machine learning, generative adversarial networks, deep neural networks, and time series analysis.

Philipp Engler

Philipp Engler is a PhD student at the German Research Center for Artificial Intelligence (DFKI) under the supervision of Prof. Dr. Prof. h.c. Andreas Dengel. He received a Master's degree in computer science and a Bachelor's degree in Physics from the University of Kaiserslautern (TUK). His current research interests include Self-Supervised Learning, Deep Neural Networks, and Time Series Analysis.

Summra Saleem

Summra Saleem is a Ph.D. student at the German Research Center for Artificial Intelligence (DFKI). She earned her Master's and Bachelor's degrees in Computer Science at the University of Engineering & Technology, Lahore, Pakistan. Her current research interests include Natural Language Processing, Probabilistic Modeling, Deep Neural Networks, and Automation of Software Engineering processes.

Seyede Ensiye Tahaei Shiyade

Ensiye Tahaei is an ML engineer at the German Research Center for Artificial Intelligence (DFKI) and holds a Master's degree in Computer Science from TU Kaiserslautern. With a background in deep neural networks and time-series analysis for forecasting and anomaly detection, she brings her knowledge to her role at FLaP, where she applies her scientific expertise to the team's practical projects.

Alumni

Ferdinand Küsters

Ferdinand was a research engineer at IAV. His work at FLaP focused on deep learning for time series, physics-based machine learning, and AutoML.

Mateus Dias Ribeiro

Mateus Dias Ribeiro was a senior researcher at the German Research Center for Artificial Intelligence (DFKI) working in the fields of machine learning and computational fluid dynamics. His research focused on time-series analysis and the construction of reduced-order models for fluid flows using neural networks.

Carlos Rojas

Carlos Rojas received his master's degree from the University of Campinas in mechanical engineering. His master's thesis was on the application of physics informed neural networks to solve inverse problems related to solid mechanics.

Mohsin Munir

Mohsin Munir received the master’s degree in computer science from the University of Kaiserslautern, Germany. The topic of his master’s thesis was Connected Heating System’s Fault Detection using Data Anomalies and Trends. His research topic is Time Series Forecasting and Anomaly Detection. His research interests are time series analysis, deep neural networks, forecasting, predictive analytics, and anomaly detection.

Maria Walch

Currently, Maria is a PhD student at the AG (working group) for Algebra, Geometry and Computer Algebra of TUK in cooperation with the German Research Center for Artificial Intelligence Kaiserslautern. Maria's research focuses on creating a synergetic bridge between deep learning and persistent homology. Maria is especially interested in representation learning and theory, time series analysis, and chaotic and complex systems. As an undergraduate Law student as well, Maria is also curious about digitalization projects in legal contexts.

Zahra Soleimani

Zahra is a master's student in Computer Science at the Technical University of Kaiserslautern. Zahra's research interests include probabilistic machine learning, generative models, and sequence models. Currently, she is working on probabilistic multistep-ahead time series forecasting utilizing generative adversarial networks, sequence to sequence learning models, and attention mechanisms.

Fabian von der Warth

Fabian is a Mathematics student at TU Kaiserslautern. As an undergraduate, he helps out in FLaP with various smaller research topics and the FLaP website. His personal interests include Reinforcement Learning and Computer Chess.

Tushara Devi Bhogadi Ravishankar

Tushara Devi Bhogadi Ravishankar is a master's student in Computer Science at the Technical University of Kaiserslautern. Tushara's research interests include Neural Architecture Search, Swarm Optimization Techniques, and deep learning for time series. Currently, she is working on the use of Neural Architecture Search methods with Swarm Optimization to solve multi-task Time Series problems as part of her master's thesis.

Sijan Bhandari

Sijan is studying for a master's degree in Computer Science at Technical University of Kaiserslautern, with specialization in intelligent systems and data visualization. He is interested in AutoML and currently focused on the applications of Reinforcement learning to automate Neural Architecture Search (NAS) for time series data as his thesis topic. His further research interests include Reinforcement Learning, time series analysis, and model interpretability.

Amala Paulson

Amala is a master's student in Computer Science at Technical University of Kaiserslautern. Amala's areas of interest are Machine Learning and Deep Learning. She is currently pursuing her research on Autoencoders, their latent representations and exploring autoencoders through visualization techniques as part of her Master Thesis.

Vikas Rajashekar

Vikas Rajashekar is a master's student of Computer Science at TU Kaiserslautern. His research interest is Natural Language Processing (NLP). He is currently working on his thesis, which aims to develop German chatbots using Transformers.

Anuj Maharjan

Anuj Maharjan is a master's student of Computer Science at TU Kaiserslautern. His research interests include AutoML and visualization of neural networks. He is currently working on differentiable architecture search for time series domain.

Akshatha Balakrishna

Master thesis: DeepValve
Occupation: Software Engineer at Elektrobit

Stephan Helfrich

PhD thesis: ForBaye, with the AG Optimierung (Optimization Group), TU Kaiserslautern

Sankrutyayan Thota

Master thesis: Assessment of various metrics for Probabilistic Time Series Analysis

Hooman Tavakoli

Master thesis: Probabilistic Regression with CGAN

Contact Us

Interested in our work? Send us a message!